Artificial Intelligence (AI) is changing almost every technology around us, and one of the most debated topics is AI hacking. From automated penetration-testing tools to the prospect of AI-driven cyberattacks, the subject is both intriguing and alarming. At the same time, AI is emerging as an effective tool for defenders, helping cybersecurity professionals detect and patch vulnerabilities and even train the next generation of white-hat hackers.

At Pensora, we explore how technologies such as AI are transforming industries, and cybersecurity is no exception. This article examines AI hacking in depth: its potential, the ethical considerations, the tools involved, and whether AI can really hack phones, networks, or even entire systems.

Key Takeaways

- AI hacking is transforming cybersecurity by automating both attacks and defenses.

- Ethical hackers use AI tools to find and test vulnerabilities quickly and efficiently.

- AI can learn and adapt, which makes it valuable for ethical hacking and malicious attacks alike.

- Organizations and schools are experimenting with AI immersion hacking as a form of training.

- The rise of AI hackers raises ethical questions, privacy concerns, and new cybersecurity threats.

- The future of AI in hacking depends on balancing innovation with responsible use.

What is AI Hacking?

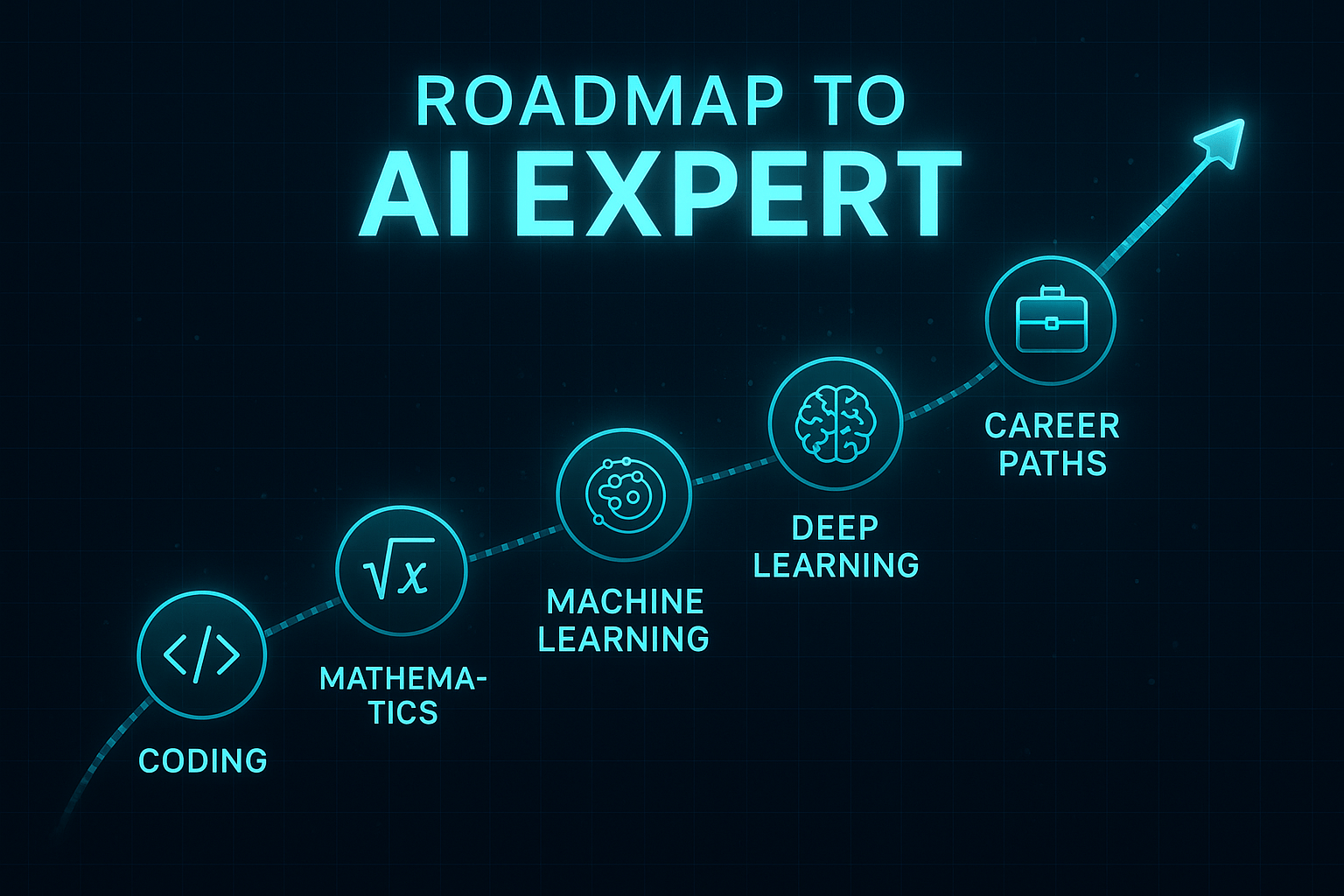

AI hacking is the use of artificial intelligence to discover, exploit, or defend against system weaknesses. Unlike conventional hacking, which relies on manually written scripts and hands-on execution, AI hacking uses machine learning, deep learning, and natural language processing to automate tasks and make decisions in real time.

This enables AI to:

- Scan thousands of computers for vulnerabilities far faster than a human could.

- Learn from previous hacking attempts to optimize future approaches.

- Write phishing messages that convincingly imitate human writing.

- Create adaptive malware that changes its behavior to evade defenses.

Meanwhile, defenders and ethical hackers are using the same techniques for positive purposes, building smarter systems that can respond to cyber threats faster than humans alone.

AI and Ethical Hacking: A New Frontier

One of the most promising uses of AI hacking is in ethical hacking, where cybersecurity experts simulate attacks to find vulnerabilities before attackers can exploit them.

Some authors are even using AI to write books and training materials on ethical hacking. These materials combine established penetration testing methodologies with AI-enhanced techniques, demonstrating how machine learning can uncover vulnerabilities in networks, applications, and devices.

In this context, AI is an ally rather than a threat, helping ethical hackers improve detection, speed, and precision.

Can AI Hack Phones?

The most frequently asked question may be: can AI hack phones?

Technically, AI does not “hack” phones on its own the way Hollywood blockbusters portray it. It can, however, help attackers find vulnerabilities in mobile operating systems such as Android and iOS. AI can:

- Identify vulnerabilities in mobile apps.

- Create convincing phishing messages through SMS or messaging applications.

- Automate brute-force attempts against weak security features.

At the same time, cybersecurity professionals use AI to harden phone security. Biometric sign-in, anomaly detection in mobile banking apps, and fraud protection all rely on AI.

So while AI can indeed be used to hack phones, it is equally true that AI helps secure billions of phones around the world.
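To make the idea of anomaly detection a little more concrete, here is a minimal Python sketch of one very simple technique: a z-score check that flags transaction amounts lying far outside a user's history. This is an illustrative assumption, not how any particular banking app works; production fraud systems combine many signals with far more sophisticated, learned models. The function name `flag_anomalies` and the default threshold of 3 standard deviations are hypothetical choices.

```python
from statistics import mean, stdev


def flag_anomalies(history, new_amounts, threshold=3.0):
    """Return the amounts in new_amounts that lie more than `threshold`
    standard deviations from the mean of `history` (a simple z-score test).

    `history` must contain at least two values, as stdev() requires.
    """
    mu = mean(history)
    sigma = stdev(history)  # sample standard deviation of past amounts
    if sigma == 0:
        # No spread in the history: treat any different amount as unusual.
        return [a for a in new_amounts if a != mu]
    return [a for a in new_amounts if abs(a - mu) / sigma > threshold]


if __name__ == "__main__":
    # Hypothetical past transaction amounts for one user.
    past = [12.5, 30.0, 18.2, 25.0, 22.4, 15.0, 28.9, 20.1]
    incoming = [24.0, 19.5, 950.0]
    print(flag_anomalies(past, incoming))  # prints [950.0]
```

The fixed z-score rule is only a stand-in for the learned models real systems use; its advantage for illustration is that every step of the decision is visible.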

AI Immersion Hacking in Schools and Training Programs

AI immersion hacking is a growing trend: schools, universities, and training organizations use AI-based simulations to teach cybersecurity.

Instead of static exercises, students can now engage with AI-driven attack simulations that adapt in real time. This creates a more realistic learning environment:

- AI can simulate phishing campaigns with high realism.

- Students are able to practice identifying AI-generated malware.

- Adaptive attack simulations test critical thinking under pressure.

Some call this approach school hack AI, in which students learn how hackers use AI and, just as importantly, how to counter it.
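For learners on the defensive side, a classic first exercise in spotting phishing is building a text classifier. The sketch below is a minimal multinomial naive Bayes classifier in plain Python with add-one (Laplace) smoothing. The class name `PhishingClassifier`, the `"phish"`/`"ham"` labels, and the tiny training set are hypothetical and only illustrate the idea; real training platforms and mail filters rely on far larger datasets and stronger models.

```python
import math
from collections import Counter, defaultdict


def tokenize(text):
    """Lowercase the text and strip simple punctuation from each word."""
    words = (w.strip(".,!?:;").lower() for w in text.split())
    return [w for w in words if w]


class PhishingClassifier:
    """Multinomial naive Bayes with add-one (Laplace) smoothing."""

    def __init__(self):
        self.word_counts = defaultdict(Counter)  # label -> word frequencies
        self.doc_counts = Counter()              # label -> number of training messages
        self.vocab = set()                       # every word seen in training

    def train(self, text, label):
        words = tokenize(text)
        self.word_counts[label].update(words)
        self.doc_counts[label] += 1
        self.vocab.update(words)

    def predict(self, text):
        total_docs = sum(self.doc_counts.values())
        words = tokenize(text)
        best_label, best_score = None, -math.inf
        for label, n_docs in self.doc_counts.items():
            counts = self.word_counts[label]
            # Log prior: share of training messages carrying this label.
            score = math.log(n_docs / total_docs)
            denom = sum(counts.values()) + len(self.vocab)
            for w in words:
                # Add-one smoothing keeps unseen words from zeroing the score.
                score += math.log((counts[w] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


if __name__ == "__main__":
    clf = PhishingClassifier()
    training = [
        ("Urgent: verify your account password now", "phish"),
        ("Your bank account is locked, click this link to verify", "phish"),
        ("You won a prize, click the link and enter your password", "phish"),
        ("Lunch meeting moved to noon tomorrow", "ham"),
        ("Here are the slides from today's lecture", "ham"),
        ("Can you review my pull request before Friday", "ham"),
    ]
    for text, label in training:
        clf.train(text, label)
    print(clf.predict("Please verify your password at this link"))  # phish
    print(clf.predict("Can we move the lecture slides to Friday"))  # ham
```

Working in log space avoids numeric underflow when many small word probabilities are multiplied, and the smoothing term means a single unfamiliar word cannot veto a label on its own.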

AI Hacking Tools: A Double-Edged Sword

Several AI-based hacking tools have emerged, with both legitimate uses and potential for misuse. These tools can:

- Scan large networks for open ports and vulnerabilities.

- Create synthetic identities for phishing and fraud.

- Recognize patterns in security systems and adapt attacks accordingly.

Examples include:

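- DeepExploit: A penetration testing tool linked with Metasploit that uses machine learning to find and exploit vulnerabilities automatically.

- MalGAN: A research approach that uses generative adversarial networks (GANs) to create malware samples that evade machine-learning-based detection systems.

- AI-powered phishing generators: Use NLP to create tailored phishing campaigns.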

These tools are invaluable to ethical hackers but dangerous in the hands of malicious actors. This underscores the need for strict regulation and clear ethical guidelines for AI hacking.

The Emergence of the AI Hacker

The AI hacker is no longer science fiction. AI can already detect weaknesses automatically, exploit them, and learn from failed attempts. Although these systems still operate under heavy human control, they raise essential questions:

- Could fully autonomous AI hackers become a reality in the future?

- If AI can hack, who is held accountable: the developer or the AI?

- Will cyberwarfare turn into AI-versus-AI conflict?

As more organizations adopt AI-powered defense systems, we may see AI-on-AI cyberwarfare, in which intelligent systems attack and defend with little or no human intervention.

Risks and Issues Surrounding AI Hacking

The development of AI hacking brings both opportunities and risks.

The main risks are:

- Job displacement: Some cybersecurity work may be automated.

- Weaponization: Attackers may build AI-powered malware.

- Bias in AI: Models trained on biased datasets may overlook certain vulnerabilities.

- Privacy concerns: AI hacking often relies on analyzing large sets of personal data.

The challenge is to ensure that AI hacking is used fairly and responsibly, balancing innovation with security.

The Future of AI Hacking

Going forward, AI hacking is likely to sit at the center of both cyberattacks and cyber defense. The question is less whether AI can hack than how we choose to use it.

- For defenders: AI will automate threat discovery, analyze malware behavior, and build more robust systems.

- For attackers: AI may drive highly advanced, adaptive attacks that are hard to anticipate.

- For learners: Schools and universities will increasingly embrace AI immersion hacking methods to train the next generation of cybersecurity professionals.

At Pensora, we envision a future built on ethical AI hacking, where training and tools are used to strengthen digital defenses rather than exploit them.

FAQ: AI Hacking Explained

Q1: What is AI hacking?

AI hacking is the use of artificial intelligence to automate, accelerate, or amplify hacking efforts, for both good and bad purposes.

Q2: Can AI write ethical hacking books?

Yes. AI is used to create ethical hacking materials, tutorials, and even full books that combine traditional techniques with AI-powered cybersecurity methods.

Q3: What is AI immersion hacking?

It is a form of training in which AI-based simulations mimic real-world cyberattacks so that students and professionals can practice defending against them.

Q4: Can AI hack phones?

Yes. AI can be used to help hack phones by targeting weaknesses in apps or operating systems, but it is also used to strengthen mobile security.

Q5: What are some common AI hacking tools?

DeepExploit, MalGAN, and AI-based phishing simulators are commonly cited examples, used in penetration testing and security research.

Q6: What is an AI hacker?

An AI hacker can be a person using AI-powered hacking software or an autonomous AI system that carries out hacking operations on its own.

Q7: What are the dangers of AI hacking?

The major dangers are AI-powered malware, invasions of privacy, bias in AI training data, and job losses in cybersecurity.

Conclusion

AI hacking is a fast-evolving field that combines innovation with threat. Although it provides powerful tools for ethical hacking, penetration testing, and training, it is also highly dangerous when misused. From the question of whether AI can hack phones to the rise of AI hackers and advanced AI hacking tools, the technology sits at the cutting edge of cybersecurity.

The future of AI hacking depends on responsible adoption. By emphasizing ethics, transparency, and training, we can ensure that AI makes cybersecurity stronger, not weaker.

At Pensora, we continue to explore these key questions, because the future of cybersecurity may depend on the choices we make now.
